I am not sure what you are making, but the only reason to use HDRP is if you are focused on maximum graphics like I am and, more importantly, don't want to purchase missing features, because there are a LOT that standard is missing. Just to get decent graphics you need to buy HBAO and UBER and find a Subsurface Scattering solution. You'll need to buy a Shader Tool or buy UV Free Shaders.

Bad graphic card benchmark example 720p

I myself just upgraded from a Radeon HD 5750, and I was getting 5x the fps in Unity standard compared to Unity HDRP. That means in a similar scene I got 100 fps in standard, and in HDRP I got 20 fps in the demo scene. My RTX 3070 gets over a thousand fps in the same scene in Unity standard and 350 fps in the HDRP demo scene. I had a discussion about this, as one of the problems with HDRP is that there seems to be an underlying bottleneck eating up a huge amount of fps even with many settings turned off. I was told this performance bottleneck is required as part of the way the pipeline needs to work for the advanced features. My biggest fear is that I can't get people with lower-end hardware to play my game, even if they run it on very low settings, because HDRP just won't allow it due to the unknown bottleneck. At least I can tell my peers to run HDRP games at 720p if they absolutely need to run them on old hardware. Unless I'm wrong and things have changed, but I would need a Unity rep to say so.

Using Unity 2019.3.0f6 and HDRP 7.1.8, I did try to select the lowest possible settings, i.e. I disabled almost all rendering settings I could find (motion vectors, custom passes etc.), SSAO, volumetrics, reflections and decals. I also disabled all shadows (I set the shadow atlas resolutions to 1024, even though this probably has no impact if there aren't any shadows at all) and was using a basic gradient sky. No AA and no contact shadows or screen space shadows. I didn't touch the lighting quality settings, since there were no shadows or AO in the scene anyway (so I thought that wouldn't have an impact). The only other thing that was enabled (which certainly comes at a cost) was the deferred rendering mode for lit shaders (there were 10 realtime lights in the scene).

Like the comment above says, your issue is most certainly because your laptop is not using the dedicated GPU. You need to look into enabling it via the graphics settings or the Nvidia settings, and I think some laptops will not enable the dedicated GPU unless they are plugged in.

But it really looks like the resolution plays a big role in this. It was just scary that I easily got more than 100 fps with an old Radeon HD 7770 in the same scene (and around 260 fps with a GTX 980, but of course we can't compare that to an Intel HD).
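For anyone comparing the fps figures quoted above, it can help to convert them to milliseconds per frame, since fps differences are not linear. This is only a rough sketch: the labels and the helper names (`frame_time_ms`, `added_cost_ms`) are made up for illustration, the numbers are the ones reported in the thread, and "over a thousand fps" is treated as exactly 1000, which is an assumption.

```python
def frame_time_ms(fps: float) -> float:
    """Convert frames per second to milliseconds spent on one frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def added_cost_ms(standard_fps: float, hdrp_fps: float) -> float:
    """Extra milliseconds per frame when going from standard to HDRP."""
    return frame_time_ms(hdrp_fps) - frame_time_ms(standard_fps)


# (hypothetical label, fps in Unity standard, fps in HDRP) as quoted in the thread.
# 1000.0 stands in for "over a thousand fps", so that row is a lower bound.
REPORTS = [
    ("older GPU, similar scene", 100.0, 20.0),
    ("RTX 3070, demo scene", 1000.0, 350.0),
]

for label, standard_fps, hdrp_fps in REPORTS:
    print(
        f"{label}: {frame_time_ms(standard_fps):.2f} ms -> "
        f"{frame_time_ms(hdrp_fps):.2f} ms "
        f"(+{added_cost_ms(standard_fps, hdrp_fps):.2f} ms per frame)"
    )
```

Seen this way, the older card pays about 40 ms extra per frame while the RTX 3070 pays under 2 ms, even though both look like a big fps drop. That doesn't say where the cost comes from, it only puts the two reports on the same scale.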

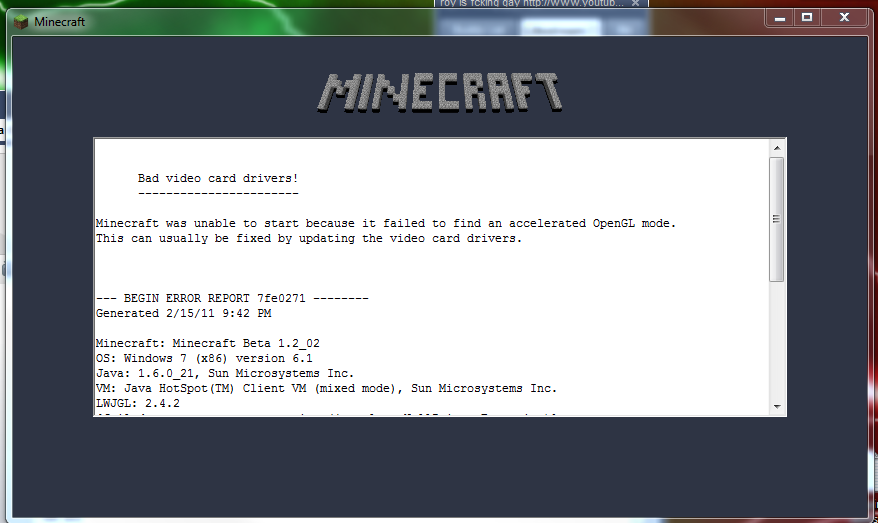

Bad graphic card benchmark example drivers

I didn't experience any issues with other, more powerful machines. I ran the same scene on an old AMD Radeon HD 7770 (Windows 7, Phenom II X4, 8 GB RAM) and got more than 100 fps. Granted, the Radeon is more powerful, but it still scares me that a game using the HDRP would be seemingly unplayable on an Intel HD adapter. I know the HDRP is targeting rather powerful hardware, but when shipping on PC, there are always people out there who try to run the game on a crappy Intel HD adapter. Obviously they cannot expect great performance or good visuals, but if the game runs at 10 fps or less even on newer-generation Intel HD adapters, it can be considered unplayable. I know the Intel drivers had serious bugs a few years ago, but I don't know if they still suffer from similar issues nowadays. Is this a known issue, a bug, or a limitation by design?

Bad graphic card benchmark example mac

I was testing the HDRP on various machines, and apparently the framerate is extremely bad on integrated Intel HD graphics adapters. I was using a basic scene with the lowest settings (all shadows and effects disabled, no custom shaders etc.) and never got more than 10 fps on an Intel HD Graphics 630 (Windows 10, i7-7700HQ, 16 GB RAM, DX11). I experienced the same issue on a Mac mini (late 2014, Intel Iris Graphics 5100); in fact, performance was slightly worse there (but the graphics adapter is actually less powerful).
